The enterprise voice AI split: Why architecture — not model quality — defines your compliance posture

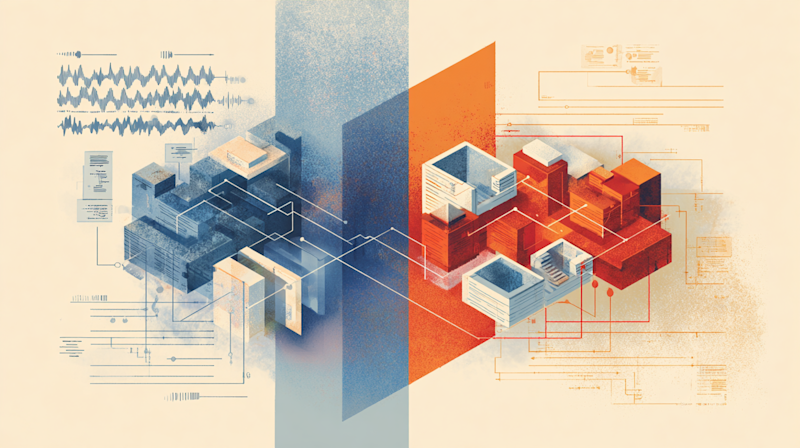

Credit: Made by VentureBeat in Midjourney. Credit: Generated by author using Google Nano Banana Pro.

For the past year, enterprise decision-makers have faced a rigid architectural trade-off in voice AI: adopt a "Native" speech-to-speech (S2S) model for speed and emotional fidelity, or stick with a "Modular" stack for control and auditability. That binary choice has evolved into distinct market segmentation, driven by two simultaneous forces reshaping the landscape.

What was once a performance decision has become a governance and compliance decision, as voice agents move from pilots into regulated, customer-facing workflows.

On one side, Google has commoditized the "raw intelligence" layer. With the release of Gemini 2.5 Flash and now Gemini 3.0 Flash, Google has positioned itself as the high-volume utility provider, with pricing that makes voice automation economically viable for workflows whose value was previously too low to justify it. OpenAI responded in August with a 20% price cut on its Realtime API, narrowing the gap with Gemini to roughly 2x - still meaningful, but no longer insurmountable.

On the other side, a new "Unified" modular architecture is emerging. By physically co-locating the disparate components of a voice stack (transcription, reasoning and synthesis), providers like Together AI are addressing the latency issues that previously hampered modular designs. This architectural counter-attack delivers native-like speed while retaining the audit trails and intervention points that regulated industries require.

Together, these forces are collapsing the historical trade-off between speed and control in enterprise voice systems.

For enterprise executives, the question is no longer just about model performance.
It's a strategic choice between a cost-efficient, generalized utility model and a domain-specific, vertically integrated stack that supports compliance requirements, including whether voice agents can be deployed at scale without introducing audit gaps, regulatory risk, or downstream liability.

Understanding the three architectural paths

These architectural differences are not academic; they directly shape latency, auditability, and the ability to intervene in live voice interactions. The enterprise voice AI market has consolidated around three distinct architectures, each optimized for different trade-offs between speed, control, and cost.

S2S models, including Google's Gemini Live and OpenAI's Realtime API, process audio inputs natively to preserve paralinguistic signals like tone and hesitation. But contrary to popular belief, these aren't true end-to-end speech models. They operate as what the industry calls "Half-Cascades": audio understanding happens natively, but the model still performs text-based reasoning before synthesizing speech output. This hybrid approach achieves latency in the 200 to 300ms range, closely mimicking human response times, where pauses beyond 200ms become perceptible and feel unnatural. The trade-off is that these intermediate reasoning steps remain opaque to enterprises, limiting auditability and policy enforcement.

Traditional chained pipelines represent the opposite extreme. These modular stacks follow a three-step relay: speech-to-text engines like Deepgram's Nova-3 or AssemblyAI's Universal-Streaming transcribe audio into text, an LLM generates a response, and text-to-speech providers such as ElevenLabs synthesize the output. Each handoff introduces network transmission time plus processing overhead. While individual components have optimized their processing...
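To make the handoff cost in a chained pipeline concrete, the three-step relay can be sketched as a simple latency budget. This is a minimal illustration, not a benchmark: the `Stage` type, the `total_latency_ms` helper, and every millisecond figure below are hypothetical and were chosen only to show how per-handoff network time accumulates across stages. Setting the network term to zero is an idealized stand-in for a co-located stack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One step in a chained voice pipeline. All figures are illustrative."""
    name: str
    processing_ms: float  # time spent inside the component itself
    network_ms: float     # transmission time for the handoff into this stage


def total_latency_ms(stages: list[Stage]) -> float:
    """Sum processing time and handoff time across every stage in the relay."""
    return sum(s.processing_ms + s.network_ms for s in stages)


# Hypothetical numbers, not measurements of any real provider.
chained = [
    Stage("speech-to-text", processing_ms=150, network_ms=50),
    Stage("llm", processing_ms=300, network_ms=50),
    Stage("text-to-speech", processing_ms=120, network_ms=50),
]

# The same components with handoff time set to zero (idealized co-location).
colocated = [Stage(s.name, s.processing_ms, 0) for s in chained]

for label, pipeline in (("chained", chained), ("co-located", colocated)):
    handoff = sum(s.network_ms for s in pipeline)
    print(f"{label}: total {total_latency_ms(pipeline):.0f} ms, "
          f"of which handoffs {handoff:.0f} ms")
```

In this toy budget the handoffs account for the entire difference between the two totals, which is the portion of latency that co-location targets; component processing time is unchanged.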


Continue reading at Venturebeat
