Building an AI agent inside a 7-year-old Rails monolith

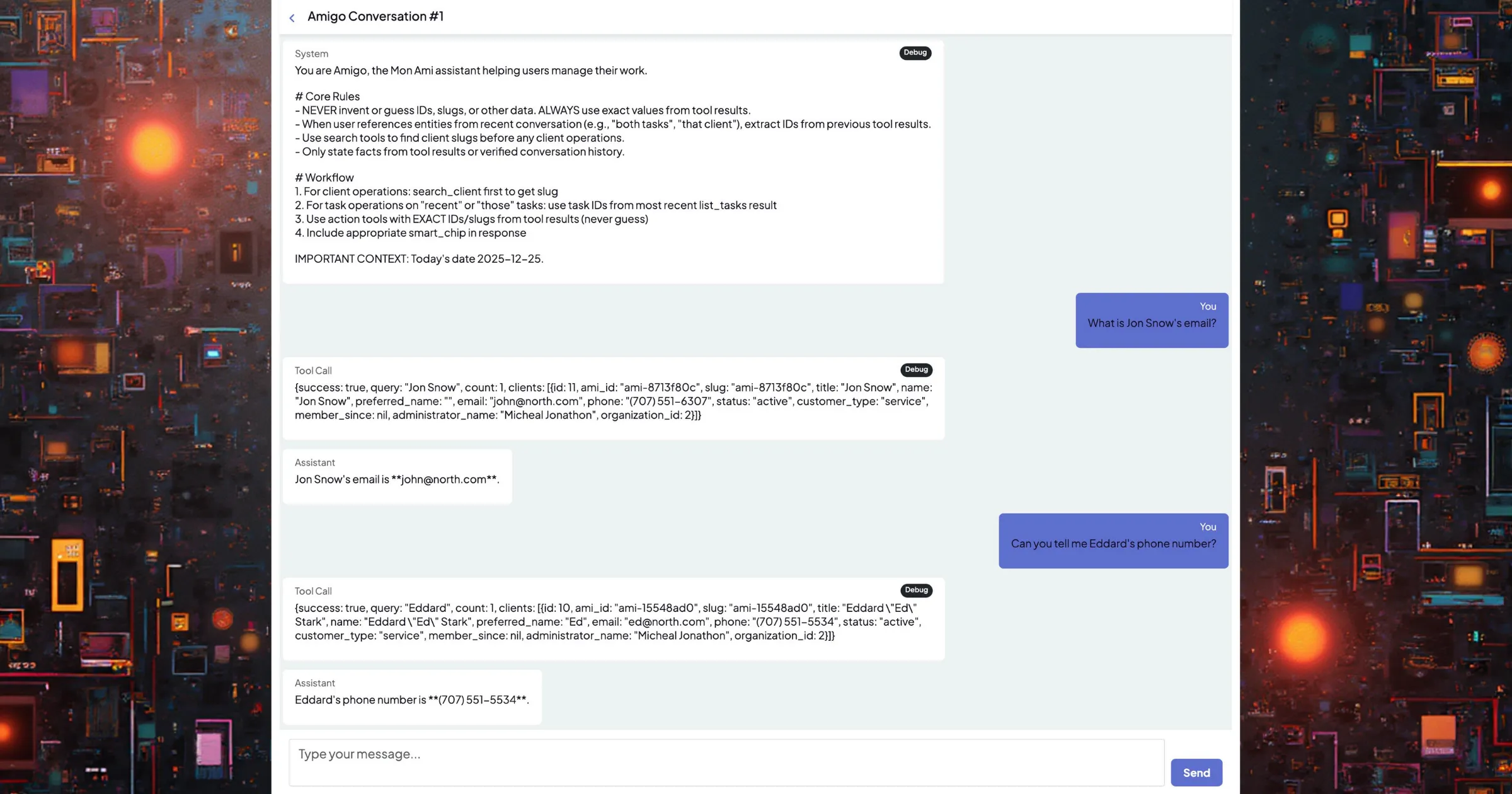

I (incorrectly) convinced myself over the last few months that there was no low-hanging AI fruit that would work for our product and business. This is a story of just how wrong I was.

I was at SF Ruby, in San Francisco, a few weeks ago. Most of the tracks were, of course, heavily focused on AI. Lots of stories from people building AI into all sorts of products using Ruby and Rails. They were good talks. But most of them assumed a kind of software I don’t work on - systems without strong boundaries, without multi-tenant concerns, without deeply embedded authorization rules. I kept thinking: this is interesting, but it doesn’t map cleanly to my world. At Mon Ami, we can’t just release a pilot unless it passes strict data access checks.

Then I saw a talk about using the RubyLLM gem to build a RAG-like system. The conversation (LLM calls) context was augmented using function calls (tools). This is when it clicked. I could encode my complicated access logic into a specific function call and ensure the LLM gets access to some of our data without having to give it unrestricted access.

RubyLLM is a neat gem that abstracts away the interaction with many LLM providers behind a clean API.

    gem "ruby_llm"

It is configured in an initializer with the API keys for the providers you want to use.

    RubyLLM.configure do |config|
      config.openai_api_key = Rails.application.credentials.dig(:openai_api_key)
      config.anthropic_api_key = Rails.application.credentials.dig(:anthropic_api_key)
      # config.default_model = "gpt-4.1-nano"

      # Use the new association-based acts_as API (recommended)
      config.use_new_acts_as = true

      # Increase timeout for slow API responses
      config.request_timeout = 600 # 10 minutes (default is 300)
      config.max_retries = 3 # Retry failed requests
    end

    # Load LLM tools from main app
    Dir[Rails.root.join('app/tools/**/*.rb')].each { |f| require f }

It provides a Conversation model as an abstraction for an LLM thread. The Conversation contains a set of Messages.
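To make the "thread of messages augmented by tools" idea concrete, here is a minimal plain-Ruby sketch of the loop that sits behind a tool-enabled conversation. It is not RubyLLM's implementation: `ToyConversation`, `Message`, the scripted `fake_model`, and the `lookup_phone` tool are all hypothetical names invented for this illustration, and a real provider call is replaced by a lambda.

```ruby
# Hypothetical stand-ins for illustration only; RubyLLM's real classes differ.
Message = Struct.new(:role, :content, keyword_init: true)

class ToyConversation
  attr_reader :messages

  def initialize(model:, tools: {})
    @model = model   # any object responding to #call(messages)
    @tools = tools   # tool name => callable taking keyword arguments
    @messages = []
  end

  def ask(text)
    @messages << Message.new(role: :user, content: text)

    loop do
      reply = @model.call(@messages)

      if reply[:tool]
        # The model asked for a tool: run it and append the result to the thread.
        result = @tools.fetch(reply[:tool]).call(**reply[:arguments])
        @messages << Message.new(role: :tool, content: result)
      else
        # Plain text reply: record it and hand it back to the caller.
        @messages << Message.new(role: :assistant, content: reply[:text])
        return reply[:text]
      end
    end
  end
end

# Scripted fake model: requests the tool once, then answers from the tool result.
fake_model = lambda do |messages|
  tool_message = messages.reverse.find { |m| m.role == :tool }
  if tool_message
    { text: "The number is #{tool_message.content[:phone]}." }
  else
    { tool: :lookup_phone, arguments: { name: 'John Snow' } }
  end
end

# Hypothetical tool returning a hash, as a stand-in for a real lookup.
tools = { lookup_phone: ->(name:) { { name: name, phone: '555-0100' } } }

conversation = ToyConversation.new(model: fake_model, tools: tools)
answer = conversation.ask('What is the phone number for John Snow?')
puts answer
puts conversation.messages.map(&:role).inspect
```

The point of the sketch is that the model never touches the data directly. It can only ask for a named tool, and whatever hash that tool returns becomes one more message in the thread.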
It also provides a way of defining structured responses and the function calls available to the model.

    AVAILABLE_TOOLS = [
      Tools::Client::SearchTool
    ].freeze

    conversation = Conversation.find(conversation_id)
    chat = conversation.with_tools(*AVAILABLE_TOOLS)
    chat.ask 'What is the phone number for John Snow?'

A Conversation is initialized by passing a model (gpt-5, claude-sonnet-4.5, etc) and has a method for chatting with it.

    conversation = Conversation.new(model: RubyLLM::Model.find_by(model_id: 'gpt-4o-mini'))

RubyLLM comes with a neat DSL for defining accepted parameters (the descriptions are passed to the LLM as context, since it needs to decide whether the tool should be used based on the conversation). The tool implements an execute method returning a hash. The hash is then presented to the LLM. This is all the magic needed.

    class SearchTool < BaseTool
      description 'Search for clients by name, ID, or email address. Returns matching clients.'

      param :query, desc: 'Search query - can be client name, ID, or email address', type: :string

      def execute(query:)
      end
    end

We’ll now build a modest function call and a messaging interface. The function call allows searching for a client using Algolia and ensures the resulting set is visible to the user (by merging in the Pundit policy).

    def execute(query:)
      response...
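The access-control idea (search, but only ever return records the current user may see) can be sketched without Algolia or Pundit. The following is a simplified illustration under stated assumptions, not the tool described in this post: `Client`, `User`, `ClientScope`, `ScopedSearchTool`, the in-memory `CLIENTS` list, and the organization-based visibility rule are all hypothetical, and the substring match stands in for a real search backend.

```ruby
# Hypothetical stand-ins: none of these classes come from RubyLLM, Algolia, or Pundit.
Client = Struct.new(:id, :name, :email, :phone, :organization_id, keyword_init: true)
User = Struct.new(:id, :organization_id, keyword_init: true)

CLIENTS = [
  Client.new(id: 1, name: 'John Snow', email: 'john@example.com', phone: '555-0100', organization_id: 10),
  Client.new(id: 2, name: 'John Snow', email: 'jsnow@example.org', phone: '555-0199', organization_id: 20),
  Client.new(id: 3, name: 'Arya Stark', email: 'arya@example.com', phone: '555-0142', organization_id: 10)
].freeze

# Plays the role of a policy scope: the records this user is allowed to see.
# Assumed rule for this sketch: users only see clients in their own organization.
class ClientScope
  def initialize(user, records)
    @user = user
    @records = records
  end

  def resolve
    @records.select { |client| client.organization_id == @user.organization_id }
  end
end

# Plays the role of the search tool. The user is fixed when the tool is built,
# so the model only ever supplies the query string, never the identity.
class ScopedSearchTool
  def initialize(user:, records: CLIENTS)
    @user = user
    @records = records
  end

  def execute(query:)
    needle = query.to_s.strip.downcase
    return { error: 'Query must not be blank' } if needle.empty?

    # Restrict to visible records first, then match on ID, name, or email.
    visible = ClientScope.new(@user, @records).resolve
    matches = visible.select do |client|
      [client.id.to_s, client.name, client.email].any? { |field| field.downcase.include?(needle) }
    end

    { count: matches.size, clients: matches.map { |c| { id: c.id, name: c.name, phone: c.phone } } }
  end
end

tool = ScopedSearchTool.new(user: User.new(id: 1, organization_id: 10))
result = tool.execute(query: 'john snow')
puts result.inspect
```

Two clients are named John Snow here, but the user in organization 10 only gets the one they are allowed to see. Because the user is bound in the constructor rather than passed as a tool parameter, nothing the model writes in a tool call can widen the scope.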
